Back in September, I published a blog post on structuring a Go project in a fairly shallow way, but deep enough for anyone to use as a basis. Back then, I intended to write one huge CloudFormation post, which I would have spent much more time structuring. Now that the application part is out, I can focus on what I originally wanted to write about: CloudFormation. However, after writing the original draft of this post, I realised that it would need a third, and even a fourth, part tackling CloudFormation. First, we need to make that Go project cloud-ready. Don’t worry, I actually finished writing the whole series as I’m about to publish this. Expect the other parts in the next few weeks.

Now, we will take the todo list server and get it ready for deployment to AWS using CloudFormation. Along the way, I will tweak the codebase by introducing a new storage option to justify the future use of CloudFormation.

That new storage option will allow me to play with DynamoDB to store and retrieve the tasks. I’ll push an event when a task is created or completed. The code to do both these things will fit fine in a new storage package.

Containerising our application

First things first, I will copy the codebase from the September post. Before we introduce our dependencies, the first thing we need to do in this post is to containerise our application. This means writing a Dockerfile that will allow us to run the application within a Docker container, which we can deploy to any cloud environment when the time comes.

In this case, since we’re using a very simple application, we can use a slightly modified version of the default Golang Dockerfile example provided on Docker Hub.

FROM golang:1.15

WORKDIR /go/src/app

COPY . .

RUN go get -d -v ./cmd/server

RUN go install -v ./cmd/server

CMD ["server"]

The first change I made is the base image tag, which now uses Golang 1.15 as it is the version I am currently using. You can check which version is on your machine by running go version.

I modified the commands that retrieve the dependencies and install the application so that they directly target the main package located at PROJECT_ROOT/cmd/server. Building that package creates a binary named server and puts it into the Go bin folder (/go/bin in the golang image), which is already on the PATH. This is why I can set server as the command to execute by default.

Following the recommendations from the golang-standards/project-layout repository for build files, I created this Dockerfile at PROJECT_ROOT/build/package/Dockerfile. You can then build the image using the following command, which assumes you are running it from your project root.

docker build -f build/package/Dockerfile -t cloudreadytodo:1.0 .

Below you can see the full build from my local environment. The Dockerfile copies the code, then retrieves the code dependencies. Then it builds and installs the application. Finally, it sets the default command of the image to run the application.

Now let’s run the image and make sure it works using the Postman collection implemented during the last post about testing.

Let’s create a new container using our newly built image:

docker run --rm -p 8080:8080 cloudreadytodo:1.0

Now you should see the application startup message, which confirms that all is well.

These API tests will give us a way to make sure nothing breaks when we add our AWS layer for local testing. The good thing is that the API won’t change, so the Postman collection put together last time will work just fine for that purpose. The port is the same too, so there is no need to change anything here.

Now we’re ready to move on to the heart of our changes: the AWS stack.

Introducing our local AWS stack

Create a local AWS profile for development

To avoid passing our fictitious credentials to every command in this post, we will create an AWS profile that we can pass to those commands on its own, so that our credentials are retrieved through it.

We can do so by using the AWS configuration command below:

aws configure --profile localdev

As in the capture above, fill the prompts with example values; any value you like will do. However, you need to set the region to “us-east-1”. Now let’s create our local stack.

Creating the local AWS stack using localstack

Before we write the new storage code, we need a way to test it. Unit tests are great, but you only truly know your code will work if you can run it against an environment matching the one you get when releasing your software. Even though AWS offers a fair amount of free usage, we can do one better by running AWS locally.

Enter localstack. What is localstack? Well, localstack provides you with local instances of various Amazon Web Services, and its free version is available on GitHub. Here, I only need one, DynamoDB, which is great since it is available in the free version.

There are a few ways to use localstack, but the one I find fastest and least invasive is to use the docker-compose file from their repository. I copied its contents into my local deployment/local folder as localstack-docker-compose.yml. Once done, you need to pick a folder that localstack will use as its temporary storage. In my case, I use a simple temporary folder named tmp.

From there, all you need to do is run the docker-compose up command. The environment variables are set the *NIX way, so if you’re on Windows you can run it from a *NIX-like shell such as Git Bash, which is shipped with Git for Windows.

TMPDIR=./deployment/local/tmp PORT_WEB_UI=8181 docker-compose -f ./deployment/local/localstack-docker-compose.yml up

That command uses a local directory as a temporary resource for localstack and sets the localstack UI port to 8181 instead of 8080, which would conflict with our application port. Even though the UI is deprecated and would require a different localstack image to run, we still need that override.

The setup should take a couple of seconds to spin up our local AWS services.

Now that the stack is up, if you look at your folders, you will notice that it created a few files within deployment/local.

You can have git ignore these while keeping the docker-compose file tracked by adding these lines to your .gitignore:

deployment/local/*

!deployment/local/localstack-docker-compose.yml

Now that localstack is set up, let’s create our local DynamoDB table!

Bonus step for DynamoDB: Create table through UI

As amazing as localstack may be, it doesn’t offer a UI to access and manipulate services. That’s fine, as they suggest a way of tackling this for DynamoDB. The main catch is that you need NodeJS installed. If you don’t have it, this link will take you to the NodeJS download page, from where I trust you can figure out the installation process.

Now that Node is installed, you need to install a Node package globally: dynamodb-admin. It lets you access your local DynamoDB and do things like creating your tables. To install it, run this command:

npm install -g dynamodb-admin

After the installation completes, you can start it by running this command:

DYNAMO_ENDPOINT=http://localhost:4566 AWS_PROFILE=localdev dynamodb-admin

Failing to specify the DYNAMO_ENDPOINT variable will cause dynamodb-admin to try to call the actual AWS endpoints.
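Why would it call real AWS? When no custom endpoint is given, AWS SDKs fall back to the public regional endpoint. The Go function below is a simplified sketch of that rule (dynamoEndpoint is a hypothetical name, and this is not dynamodb-admin’s actual code); the empty default of the server’s -endpoint flag introduced later appears to rely on the same behaviour.

```go
package main

// dynamoEndpoint sketches the endpoint choice: an explicit endpoint (such as
// the localstack one) wins, otherwise the regional AWS endpoint is used.
// Simplified: it ignores partitions with other domains, such as China.
func dynamoEndpoint(custom, region string) string {
	if custom != "" {
		return custom
	}
	return "https://dynamodb." + region + ".amazonaws.com"
}
```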

It will take a few seconds to start.

Judging by this startup output, I’m guessing that people mostly use it to access actual DynamoDB tables. By default, the UI is served on http://localhost:8001. Let’s open this sucker.

The UI is fairly straightforward. We can now create a table without having to do it programmatically. Clicking on “Create Table” will give you the table creation UI.

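There, I’ll name the table TodoList, which matches the default table name the application uses later on, and go with id as the Hash Attribute Name (the partition key: DynamoDB hashes its value to decide which partition stores each item). The choice doesn’t matter much for this example, but you will want to be careful when designing production databases. After pressing “Submit”, you will see that the table was created.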
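If you would rather not depend on the UI, the same table can be described as a DynamoDB CreateTable request. The sketch below builds that request body in Go with local structs that mirror the API’s field names (they are not the AWS SDK types), assuming id is a string attribute and on-demand billing; the UI may pick other defaults, such as provisioned capacity.

```go
package main

import "encoding/json"

// These structs mirror the field names of the DynamoDB CreateTable request.
// They are local types for illustration, not the AWS SDK's own types.
type AttributeDefinition struct {
	AttributeName string
	AttributeType string // "S" (string), "N" (number) or "B" (binary)
}

type KeySchemaElement struct {
	AttributeName string
	KeyType       string // "HASH" for the partition key, "RANGE" for a sort key
}

type CreateTableInput struct {
	TableName            string
	AttributeDefinitions []AttributeDefinition
	KeySchema            []KeySchemaElement
	BillingMode          string
}

// todoTableRequest builds the request for a table whose partition key is a
// string attribute named id. On-demand billing avoids picking capacity values.
func todoTableRequest(tableName string) ([]byte, error) {
	return json.Marshal(CreateTableInput{
		TableName:            tableName,
		AttributeDefinitions: []AttributeDefinition{{AttributeName: "id", AttributeType: "S"}},
		KeySchema:            []KeySchemaElement{{AttributeName: "id", KeyType: "HASH"}},
		BillingMode:          "PAY_PER_REQUEST",
	})
}
```

Saved to a file such as table.json, the output should be usable with aws dynamodb create-table --cli-input-json file://table.json --endpoint-url http://localhost:4566 --profile localdev.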

Now that our local environment is fully set up, we can implement the new storage code without any need to connect to actual AWS instances.

Implementing the new storage code

Missing unit tests for fast testing

As I am a terrible person, I did not write any tests last time. Well, not until that late October post on testing where I introduced API tests using Postman.

Since we’re introducing a new storage layer, these tests will come in very handy. On top of these, I decided to add some unit tests to get lower-level validation of the application logic. As you can see below, the added tests pass.

I kept these to a minimum, as this series, once meant to be a single blog post, became four and generated a huge amount of work. Still, the key bits of logic, which live in the completion and creation packages, are covered.

You’re not really cloud-ready until you have a way to validate that your application’s behaviour will remain the same once it is up and running. Unit tests offer coverage but no absolute certainty as to what happens in production, which is where the API tests come in. Where unit tests are much better is in finding issues in a more localised way, which helps to fix bugs faster.

Introducing the code changes you won’t see here but can check on a repo later

Now that we have our full testing base, let’s introduce our AWS storage package. All I need now is a new main file to set up the application and a new storage package using the AWS libraries for DynamoDB. As I’m using Go modules, installing the Go AWS SDK is as easy as running this command:

go get github.com/aws/[email protected]

After implementing the new AWS package using the AWS Go SDK, all that needs to happen is modifying the main package to use the AWS storage implementation rather than the existing in-memory one.

The difference in the main package is that we replace the in-memory storage layer that we had here:

s := memory.NewStorage()

With the code to set up our AWS storage layer:

var tableName string

var awsEndpoint string

flag.StringVar(&tableName, "tableName", "TodoList", "DynamoDB table")

flag.StringVar(&awsEndpoint, "endpoint", "", "AWS endpoint (used for local dev)")

flag.Parse()

s := aws.NewStorage(tableName, awsEndpoint)

Here I introduce flags for the DynamoDB table name and the AWS endpoint, so that this code can be executed the same way locally or on AWS: locally, the endpoint points at localstack, while on AWS it stays empty so the regular endpoints are used.
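To check the flag handling without starting the server, the same two flags can be registered on a dedicated flag.FlagSet. This is a sketch: Config, parseConfig and usesCustomEndpoint are hypothetical names, while the flag names and defaults are the ones from the snippet above.

```go
package main

import "flag"

// Config holds the two settings the server reads from its flags.
type Config struct {
	TableName   string
	AWSEndpoint string
}

// parseConfig registers the same flags as the main package on its own
// FlagSet, so parsing can be exercised with arbitrary arguments.
func parseConfig(args []string) (Config, error) {
	var cfg Config
	fs := flag.NewFlagSet("server", flag.ContinueOnError)
	fs.StringVar(&cfg.TableName, "tableName", "TodoList", "DynamoDB table")
	fs.StringVar(&cfg.AWSEndpoint, "endpoint", "", "AWS endpoint (used for local dev)")
	if err := fs.Parse(args); err != nil {
		return Config{}, err
	}
	return cfg, nil
}

// usesCustomEndpoint reports whether the server targets a custom endpoint
// such as localstack rather than the regular AWS endpoints.
func (c Config) usesCustomEndpoint() bool {
	return c.AWSEndpoint != ""
}
```

In main, this would be called as parseConfig(os.Args[1:]).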

Let’s build and execute our new server locally before we update our Docker image.

go build -v ./cmd/server/

AWS_PROFILE=localdev ./server -endpoint http://localhost:4566

The endpoint URL is the one exposed by localstack.

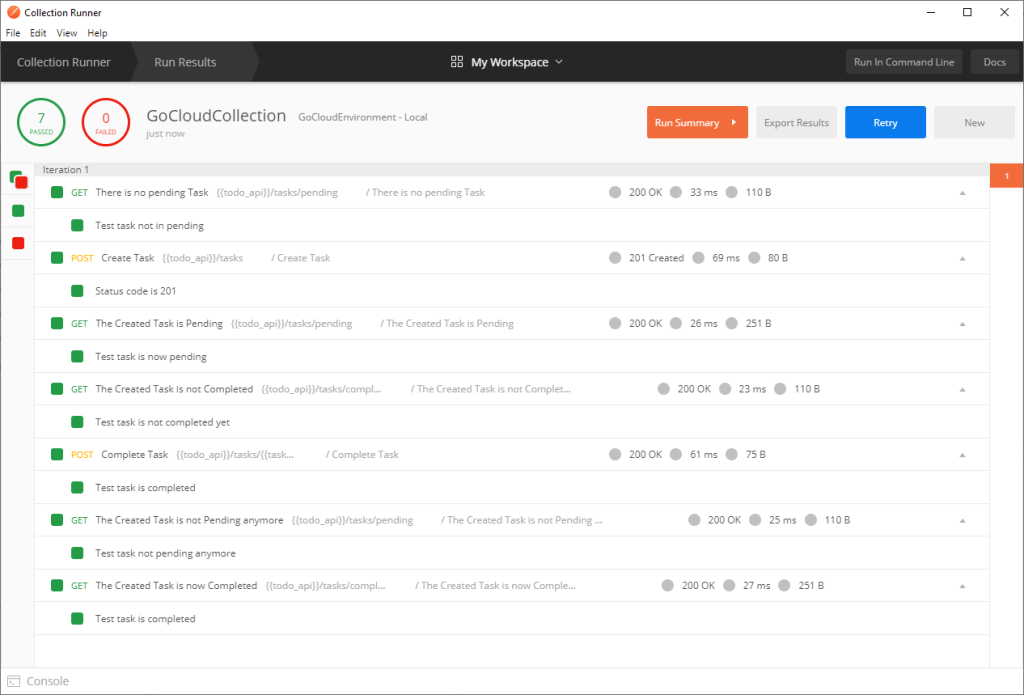

Now that our stack and updated application are running, we can run our Postman collection tests.

Every test runs green, which means nothing broke and our new changes work with the local stack. You will notice that the response times are slightly higher, as we are no longer dealing with in-memory storage but with localstack handling our tasks.

Becoming fully cloud-ready with a final Dockerfile update

Now that we have a version of our application which works with localstack, we can update our Dockerfile to take into consideration the region and endpoint parameters.

FROM golang:1.15.3-alpine3.12

EXPOSE 8080/tcp

WORKDIR /go/src/app

COPY . .

RUN go get -d -v ./cmd/server

RUN go install -v ./cmd/server

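CMD [ "sh", "-c", "exec server -endpoint \"$AWS_ENDPOINT\"" ]

Here I switched to a lighter Alpine-based image, exposed port 8080 and changed the CMD so that the endpoint flag gets its value from the AWS_ENDPOINT environment variable. The whole command line has to be a single string after sh -c, otherwise the flag and its value are handed to the shell as positional parameters instead of to server; exec then replaces the shell with the server process. The region is passed through the AWS_REGION environment variable, which the AWS SDK picks up on its own. Now let’s build the image once more, but with a new tag.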

docker build -f build/package/Dockerfile -t cloudreadytodo:2.0 .

Finally, we can run the image by passing the environment variables we just introduced:

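docker run --rm -p 8080:8080 -e AWS_REGION=us-east-1 -e AWS_ENDPOINT=http://localhost:4566 -e AWS_ACCESS_KEY_ID=example -e AWS_SECRET_ACCESS_KEY=example cloudreadytodo:2.0

Here we definitely need to explicitly set our dummy AWS credentials variables, as the container does not have access to our machine’s profile. Keep in mind that inside the container, localhost refers to the container itself: if the application cannot reach localstack, point AWS_ENDPOINT at the host instead (for example http://host.docker.internal:4566 with Docker Desktop) or, on Linux, run the container with --network host.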

Let’s run our Postman collection one more time:

All green! We are now cloud-ready. CloudFormation-ready, even. Thanks for reading, and see you for the final part of the Go Cloud trilogy, where we deploy all this to AWS using CloudFormation. If you want to read the first entry, you can click here. You can find the code and Postman files over here.

Note: The final code looks a bit different from the bits shown in this post, as I refactored the configuration part so that the AWS configuration can be reused to create the table locally with a simple command instead of the UI.

See you next time, which, now that I think about it, might not be the final chapter of this Go Cloud thing.

Cover by Naveen Annam from Pexels